1

.env

|

|

@@ -6,3 +6,4 @@ WO_SSL_KEY=

|

|||

WO_SSL_CERT=

|

||||

WO_SSL_INSECURE_PORT_REDIRECT=80

|

||||

WO_DEBUG=YES

|

||||

WO_BROKER=redis://broker

|

||||

|

|

|

|||

|

|

@@ -75,6 +75,7 @@ target/

|

|||

|

||||

# celery beat schedule file

|

||||

celerybeat-schedule

|

||||

celerybeat.pid

|

||||

|

||||

# dotenv

|

||||

.env

|

||||

|

|

|

|||

|

|

@@ -1,4 +1,4 @@

|

|||

FROM python:3.5

|

||||

FROM python:3.6

|

||||

MAINTAINER Piero Toffanin <pt@masseranolabs.com>

|

||||

|

||||

ENV PYTHONUNBUFFERED 1

|

||||

|

|

@@ -8,7 +8,7 @@ ENV PYTHONPATH $PYTHONPATH:/webodm

|

|||

RUN mkdir /webodm

|

||||

WORKDIR /webodm

|

||||

|

||||

RUN curl --silent --location https://deb.nodesource.com/setup_6.x | bash -

|

||||

RUN curl --silent --location https://deb.nodesource.com/setup_8.x | bash -

|

||||

RUN apt-get -qq install -y nodejs

|

||||

|

||||

# Configure use of testing branch of Debian

|

||||

|

|

@@ -36,7 +36,7 @@ WORKDIR /webodm/nodeodm/external/node-OpenDroneMap

|

|||

RUN npm install --quiet

|

||||

|

||||

WORKDIR /webodm

|

||||

RUN npm install --quiet -g webpack && npm install --quiet && webpack

|

||||

RUN npm install --quiet -g webpack@3.11.0 && npm install --quiet && webpack

|

||||

RUN python manage.py collectstatic --noinput

|

||||

|

||||

RUN rm /webodm/webodm/secret_key.py

|

||||

|

|

|

|||

38

README.md

|

|

@@ -21,6 +21,7 @@ A free, user-friendly, extendable application and [API](http://docs.webodm.org)

|

|||

* [Getting Help](#getting-help)

|

||||

* [Support the Project](#support-the-project)

|

||||

* [Become a Contributor](#become-a-contributor)

|

||||

* [Architecture Overview](#architecture-overview)

|

||||

* [Run the docker version as a Linux Service](#run-the-docker-version-as-a-linux-service)

|

||||

* [Run it natively](#run-it-natively)

|

||||

|

||||

|

|

@@ -86,7 +87,7 @@ You **will not be able to distribute a single job across multiple processing nod

|

|||

If you want to run WebODM in production, make sure to pass the `--no-debug` flag while starting WebODM:

|

||||

|

||||

```bash

|

||||

./webodm.sh down && ./webodm.sh start --no-debug

|

||||

./webodm.sh restart --no-debug

|

||||

```

|

||||

|

||||

This will disable the `DEBUG` flag from `webodm/settings.py` within the docker container. This is [really important](https://docs.djangoproject.com/en/1.11/ref/settings/#std:setting-DEBUG).

|

||||

|

|

@@ -100,7 +101,7 @@ WebODM has the ability to automatically request and install a SSL certificate vi

|

|||

- Run the following:

|

||||

|

||||

```bash

|

||||

./webodm.sh down && ./webodm.sh start --ssl --hostname webodm.myorg.com

|

||||

./webodm.sh restart --ssl --hostname webodm.myorg.com

|

||||

```

|

||||

|

||||

That's it! The certificate will automatically renew when needed.

|

||||

|

|

@@ -112,7 +113,7 @@ If you want to specify your own key/certificate pair, simply pass the `--ssl-key

|

|||

When using Docker, all processing results are stored in a docker volume and are not available on the host filesystem. If you want to store your files on the host filesystem instead of a docker volume, you need to pass a path via the `--media-dir` option:

|

||||

|

||||

```bash

|

||||

./webodm.sh down && ./webodm.sh start --media-dir /home/user/webodm_data

|

||||

./webodm.sh restart --media-dir /home/user/webodm_data

|

||||

```

|

||||

|

||||

Note that existing task results will not be available after the change. Refer to the [Migrate Data Volumes](https://docs.docker.com/engine/tutorials/dockervolumes/#backup-restore-or-migrate-data-volumes) section of the Docker documentation for information on migrating existing task results.

|

||||

|

|

@@ -123,7 +124,7 @@ Sympthoms | Possible Solutions

|

|||

--------- | ------------------

|

||||

While starting WebODM you get: `from six.moves import _thread as thread ImportError: cannot import name _thread` | Try running: `sudo pip install --ignore-installed six`

|

||||

While starting WebODM you get: `'WaitNamedPipe','The system cannot find the file specified.'` | 1. Make sure you have enabled VT-x virtualization in the BIOS.<br/>2. Try to downgrade your version of Python to 2.7

|

||||

While Accessing the WebODM interface you get: `OperationalError at / could not translate host name “db” to address: Name or service not known` or `ProgrammingError at / relation “auth_user” does not exist` | Try restarting your computer, then type: `./webodm.sh down && ./webodm.sh start`

|

||||

While Accessing the WebODM interface you get: `OperationalError at / could not translate host name “db” to address: Name or service not known` or `ProgrammingError at / relation “auth_user” does not exist` | Try restarting your computer, then type: `./webodm.sh restart`

|

||||

Task output or console shows one of the following:<ul><li>`MemoryError`</li><li>`Killed`</li></ul> | Make sure that your Docker environment has enough RAM allocated: [MacOS Instructions](http://stackoverflow.com/a/39720010), [Windows Instructions](https://docs.docker.com/docker-for-windows/#advanced)

|

||||

After an update, you get: `django.contrib.auth.models.DoesNotExist: Permission matching query does not exist.` | Try to remove your WebODM folder and start from a fresh git clone

|

||||

Task fails with `Process exited with code null`, no task console output - OR - console output shows `Illegal Instruction` | If the computer running node-opendronemap is using an old or 32bit CPU, you need to compile [OpenDroneMap](https://github.com/OpenDroneMap/OpenDroneMap) from sources and setup node-opendronemap natively. You cannot use docker. Docker images work with CPUs with 64-bit extensions, MMX, SSE, SSE2, SSE3 and SSSE3 instruction set support or higher.

|

||||

|

|

@@ -191,7 +192,7 @@ Developer, I'm looking to build an app that will stay behind a firewall and just

|

|||

- [ ] Volumetric Measurements

|

||||

- [X] Cluster management and setup.

|

||||

- [ ] Mission Planner

|

||||

- [ ] Plugins/Webhooks System

|

||||

- [X] Plugins/Webhooks System

|

||||

- [X] API

|

||||

- [X] Documentation

|

||||

- [ ] Android Mobile App

|

||||

|

|

@@ -239,6 +240,17 @@ When your first pull request is accepted, don't forget to fill [this form](https

|

|||

|

||||

<img src="https://user-images.githubusercontent.com/1951843/36511023-344f86b2-1733-11e8-8cae-236645db407b.png" alt="T-Shirt" width="50%">

|

||||

|

||||

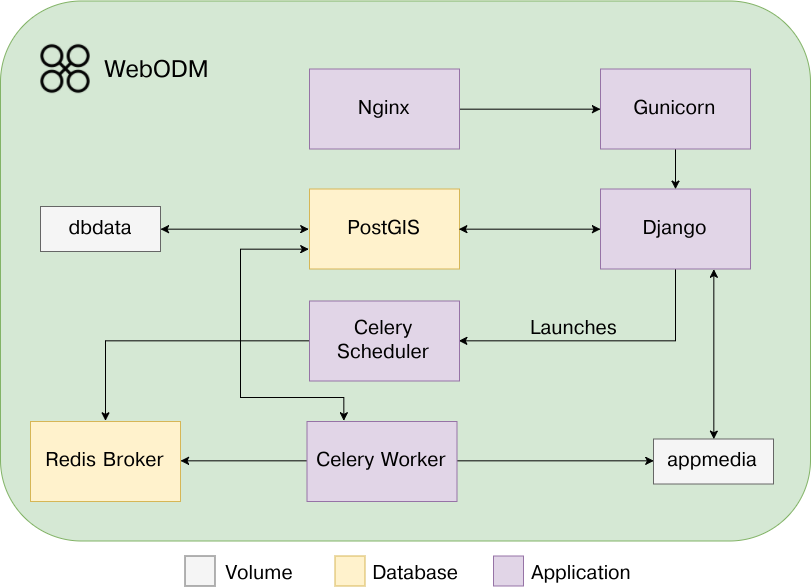

## Architecture Overview

|

||||

|

||||

WebODM is built with scalability and performance in mind. While the default setup places all databases and applications on the same machine, users can separate its components for increased performance (ex. place a Celery worker on a separate machine for running background tasks).

|

||||

|

||||

|

||||

|

||||

A few things to note:

|

||||

* We use Celery workers to do background tasks such as resizing images and processing task results, but we use an ad-hoc scheduling mechanism to communicate with node-OpenDroneMap (which processes the orthophotos, 3D models, etc.). The choice to use two separate systems for task scheduling is due to the flexibility that an ad-hoc mechanism gives us for certain operations (capture task output, persistent data and ability to restart tasks mid-way, communication via REST calls, etc.).

|

||||

* If loaded on multiple machines, Celery workers should all share their `app/media` directory with the Django application (via network shares). You can manage workers via `./worker.sh`

|

||||

|

||||

|

||||

## Run the docker version as a Linux Service

|

||||

|

||||

If you wish to run the docker version with auto start/monitoring/stop, etc, as a systemd style Linux Service, a systemd unit file is included in the service folder of the repo.

|

||||

|

|

@@ -288,6 +300,7 @@ To run WebODM, you will need to install:

|

|||

* GDAL (>= 2.1)

|

||||

* Node.js (>= 6.0)

|

||||

* Nginx (Linux/MacOS) - OR - Apache + mod_wsgi (Windows)

|

||||

* Redis (>= 2.6)

|

||||

|

||||

On Linux, make sure you have:

|

||||

|

||||

|

|

@@ -329,17 +342,29 @@ ALTER SYSTEM SET postgis.enable_outdb_rasters TO True;

|

|||

ALTER SYSTEM SET postgis.gdal_enabled_drivers TO 'GTiff';

|

||||

```

|

||||

|

||||

Start the redis broker:

|

||||

|

||||

```bash

|

||||

redis-server

|

||||

```

|

||||

|

||||

Then:

|

||||

|

||||

```bash

|

||||

pip install -r requirements.txt

|

||||

sudo npm install -g webpack

|

||||

sudo npm install -g webpack@3.11.0

|

||||

npm install

|

||||

webpack

|

||||

python manage.py collectstatic --noinput

|

||||

chmod +x start.sh && ./start.sh --no-gunicorn

|

||||

```

|

||||

|

||||

Finally, start at least one celery worker:

|

||||

|

||||

```bash

|

||||

./worker.sh start

|

||||

```

|

||||

|

||||

The `start.sh` script will use Django's built-in server if you pass the `--no-gunicorn` parameter. This is good for testing, but bad for production.

|

||||

|

||||

In production, if you have nginx installed, modify the configuration file in `nginx/nginx.conf` to match your system's configuration and just run `start.sh` without parameters.

|

||||

|

|

@@ -372,5 +397,6 @@ python --version

|

|||

pip --version

|

||||

npm --version

|

||||

gdalinfo --version

|

||||

redis-server --version

|

||||

```

|

||||

Should all work without errors.

|

||||

|

|

|

|||

1

VERSION

|

|

@@ -1 +0,0 @@

|

|||

0.4.1

|

||||

|

|

@@ -1,24 +1,20 @@

|

|||

import mimetypes

|

||||

import os

|

||||

from wsgiref.util import FileWrapper

|

||||

|

||||

from django.contrib.gis.db.models import GeometryField

|

||||

from django.contrib.gis.db.models.functions import Envelope

|

||||

from django.core.exceptions import ObjectDoesNotExist, SuspiciousFileOperation, ValidationError

|

||||

from django.db import transaction

|

||||

from django.db.models.functions import Cast

|

||||

from django.http import HttpResponse

|

||||

from wsgiref.util import FileWrapper

|

||||

from rest_framework import status, serializers, viewsets, filters, exceptions, permissions, parsers

|

||||

from rest_framework.decorators import detail_route

|

||||

from rest_framework.permissions import IsAuthenticatedOrReadOnly

|

||||

from rest_framework.response import Response

|

||||

from rest_framework.decorators import detail_route

|

||||

from rest_framework.views import APIView

|

||||

|

||||

from nodeodm import status_codes

|

||||

from .common import get_and_check_project, get_tile_json, path_traversal_check

|

||||

|

||||

from app import models, scheduler, pending_actions

|

||||

from app import models, pending_actions

|

||||

from nodeodm.models import ProcessingNode

|

||||

from worker import tasks as worker_tasks

|

||||

from .common import get_and_check_project, get_tile_json, path_traversal_check

|

||||

|

||||

|

||||

class TaskIDsSerializer(serializers.BaseSerializer):

|

||||

|

|

@@ -84,8 +80,8 @@ class TaskViewSet(viewsets.ViewSet):

|

|||

task.last_error = None

|

||||

task.save()

|

||||

|

||||

# Call the scheduler (speed things up)

|

||||

scheduler.process_pending_tasks(background=True)

|

||||

# Process task right away

|

||||

worker_tasks.process_task.delay(task.id)

|

||||

|

||||

return Response({'success': True})

|

||||

|

||||

|

|

@@ -149,7 +145,8 @@ class TaskViewSet(viewsets.ViewSet):

|

|||

raise exceptions.ValidationError(detail="Cannot create task, you need at least 2 images")

|

||||

|

||||

with transaction.atomic():

|

||||

task = models.Task.objects.create(project=project)

|

||||

task = models.Task.objects.create(project=project,

|

||||

pending_action=pending_actions.RESIZE if 'resize_to' in request.data else None)

|

||||

|

||||

for image in files:

|

||||

models.ImageUpload.objects.create(task=task, image=image)

|

||||

|

|

@@ -159,7 +156,9 @@ class TaskViewSet(viewsets.ViewSet):

|

|||

serializer.is_valid(raise_exception=True)

|

||||

serializer.save()

|

||||

|

||||

return Response(serializer.data, status=status.HTTP_201_CREATED)

|

||||

worker_tasks.process_task.delay(task.id)

|

||||

|

||||

return Response(serializer.data, status=status.HTTP_201_CREATED)

|

||||

|

||||

|

||||

def update(self, request, pk=None, project_pk=None, partial=False):

|

||||

|

|

@@ -180,8 +179,8 @@ class TaskViewSet(viewsets.ViewSet):

|

|||

serializer.is_valid(raise_exception=True)

|

||||

serializer.save()

|

||||

|

||||

# Call the scheduler (speed things up)

|

||||

scheduler.process_pending_tasks(background=True)

|

||||

# Process task right away

|

||||

worker_tasks.process_task.delay(task.id)

|

||||

|

||||

return Response(serializer.data)

|

||||

|

||||

|

|

|

|||

|

|

@@ -1,40 +0,0 @@

|

|||

from threading import Thread

|

||||

|

||||

import logging

|

||||

from django import db

|

||||

from app.testwatch import testWatch

|

||||

|

||||

logger = logging.getLogger('app.logger')

|

||||

|

||||

def background(func):

|

||||

"""

|

||||

Adds background={True|False} param to any function

|

||||

so that we can call update_nodes_info(background=True) from the outside

|

||||

"""

|

||||

def wrapper(*args,**kwargs):

|

||||

background = kwargs.get('background', False)

|

||||

if 'background' in kwargs: del kwargs['background']

|

||||

|

||||

if background:

|

||||

if testWatch.hook_pre(func, *args, **kwargs): return

|

||||

|

||||

# Create a function that closes all

|

||||

# db connections at the end of the thread

|

||||

# This is necessary to make sure we don't leave

|

||||

# open connections lying around.

|

||||

def execute_and_close_db():

|

||||

ret = None

|

||||

try:

|

||||

ret = func(*args, **kwargs)

|

||||

finally:

|

||||

db.connections.close_all()

|

||||

testWatch.hook_post(func, *args, **kwargs)

|

||||

return ret

|

||||

|

||||

t = Thread(target=execute_and_close_db)

|

||||

t.daemon = True

|

||||

t.start()

|

||||

return t

|

||||

else:

|

||||

return func(*args, **kwargs)

|

||||

return wrapper

|

||||

31

app/boot.py

|

|

@@ -1,5 +1,6 @@

|

|||

import os

|

||||

|

||||

import kombu

|

||||

from django.contrib.auth.models import Permission

|

||||

from django.contrib.auth.models import User, Group

|

||||

from django.core.exceptions import ObjectDoesNotExist

|

||||

|

|

@@ -7,12 +8,14 @@ from django.core.files import File

|

|||

from django.db.utils import ProgrammingError

|

||||

from guardian.shortcuts import assign_perm

|

||||

|

||||

from worker import tasks as worker_tasks

|

||||

from app.models import Preset

|

||||

from app.models import Theme

|

||||

from app.plugins import register_plugins

|

||||

from nodeodm.models import ProcessingNode

|

||||

# noinspection PyUnresolvedReferences

|

||||

from webodm.settings import MEDIA_ROOT

|

||||

from . import scheduler, signals

|

||||

from . import signals

|

||||

import logging

|

||||

from .models import Task, Setting

|

||||

from webodm import settings

|

||||

|

|

@@ -21,12 +24,14 @@ from webodm.wsgi import booted

|

|||

|

||||

def boot():

|

||||

# booted is a shared memory variable to keep track of boot status

|

||||

# as multiple workers could trigger the boot sequence twice

|

||||

# as multiple gunicorn workers could trigger the boot sequence twice

|

||||

if not settings.DEBUG and booted.value: return

|

||||

|

||||

booted.value = True

|

||||

logger = logging.getLogger('app.logger')

|

||||

|

||||

logger.info("Booting WebODM {}".format(settings.VERSION))

|

||||

|

||||

if settings.DEBUG:

|

||||

logger.warning("Debug mode is ON (for development this is OK)")

|

||||

|

||||

|

|

@@ -57,17 +62,16 @@ def boot():

|

|||

|

||||

# Add default presets

|

||||

Preset.objects.get_or_create(name='DSM + DTM', system=True,

|

||||

options=[{'name': 'dsm', 'value': True}, {'name': 'dtm', 'value': True}])

|

||||

options=[{'name': 'dsm', 'value': True}, {'name': 'dtm', 'value': True}, {'name': 'mesh-octree-depth', 'value': 11}])

|

||||

Preset.objects.get_or_create(name='Fast Orthophoto', system=True,

|

||||

options=[{'name': 'fast-orthophoto', 'value': True}])

|

||||

Preset.objects.get_or_create(name='High Quality', system=True,

|

||||

options=[{'name': 'dsm', 'value': True},

|

||||

{'name': 'skip-resize', 'value': True},

|

||||

{'name': 'mesh-octree-depth', 'value': "12"},

|

||||

{'name': 'use-25dmesh', 'value': True},

|

||||

{'name': 'min-num-features', 'value': 8000},

|

||||

{'name': 'dem-resolution', 'value': "0.04"},

|

||||

{'name': 'orthophoto-resolution', 'value': "60"},

|

||||

{'name': 'orthophoto-resolution', 'value': "40"},

|

||||

])

|

||||

Preset.objects.get_or_create(name='Default', system=True, options=[{'name': 'dsm', 'value': True}])

|

||||

Preset.objects.get_or_create(name='Default', system=True, options=[{'name': 'dsm', 'value': True}, {'name': 'mesh-octree-depth', 'value': 11}])

|

||||

|

||||

# Add settings

|

||||

default_theme, created = Theme.objects.get_or_create(name='Default')

|

||||

|

|

@@ -87,11 +91,14 @@ def boot():

|

|||

# Unlock any Task that might have been locked

|

||||

Task.objects.filter(processing_lock=True).update(processing_lock=False)

|

||||

|

||||

if not settings.TESTING:

|

||||

# Setup and start scheduler

|

||||

scheduler.setup()

|

||||

register_plugins()

|

||||

|

||||

if not settings.TESTING:

|

||||

try:

|

||||

worker_tasks.update_nodes_info.delay()

|

||||

except kombu.exceptions.OperationalError as e:

|

||||

logger.error("Cannot connect to celery broker at {}. Make sure that your redis-server is running at that address: {}".format(settings.CELERY_BROKER_URL, str(e)))

|

||||

|

||||

scheduler.update_nodes_info(background=True)

|

||||

|

||||

except ProgrammingError:

|

||||

logger.warning("Could not touch the database. If running a migration, this is expected.")

|

||||

|

|

@@ -0,0 +1,6 @@

|

|||

+proj=utm +zone=15 +ellps=WGS84 +datum=WGS84 +units=m +no_defs

|

||||

576529.22 5188003.22 0 4 6 tiny_drone_image.JPG

|

||||

576529.25 5188003.25 0 7.75 8.25 tiny_drone_image.JPG

|

||||

576529.22 5188003.22 0 4 6 tiny_drone_image_2.jpg

|

||||

576529.27 5188003.27 0 8.19 8.42 tiny_drone_image_2.jpg

|

||||

576529.27 5188003.27 0 8 8 missing_image.jpg

|

||||

|

|

@@ -0,0 +1,4 @@

|

|||

|

||||

<O_O>

|

||||

1 2 3 4 5 6

|

||||

1 hello 3 hello 5 6

|

||||

|

|

@@ -0,0 +1,25 @@

|

|||

# -*- coding: utf-8 -*-

|

||||

# Generated by Django 1.11.7 on 2018-02-19 19:46

|

||||

from __future__ import unicode_literals

|

||||

|

||||

from django.db import migrations, models

|

||||

|

||||

|

||||

class Migration(migrations.Migration):

|

||||

|

||||

dependencies = [

|

||||

('app', '0016_public_task_uuids'),

|

||||

]

|

||||

|

||||

operations = [

|

||||

migrations.AddField(

|

||||

model_name='task',

|

||||

name='resize_to',

|

||||

field=models.IntegerField(default=-1, help_text='When set to a value different than -1, indicates that the images for this task have been / will be resized to the size specified here before processing.'),

|

||||

),

|

||||

migrations.AlterField(

|

||||

model_name='task',

|

||||

name='pending_action',

|

||||

field=models.IntegerField(blank=True, choices=[(1, 'CANCEL'), (2, 'REMOVE'), (3, 'RESTART'), (4, 'RESIZE')], db_index=True, help_text='A requested action to be performed on the task. The selected action will be performed by the worker at the next iteration.', null=True),

|

||||

),

|

||||

]

|

||||

|

|

@@ -32,7 +32,7 @@ class Project(models.Model):

|

|||

super().delete(*args)

|

||||

else:

|

||||

# Need to remove all tasks before we can remove this project

|

||||

# which will be deleted on the scheduler after pending actions

|

||||

# which will be deleted by workers after pending actions

|

||||

# have been completed

|

||||

self.task_set.update(pending_action=pending_actions.REMOVE)

|

||||

self.deleting = True

|

||||

|

|

|

|||

|

|

@@ -4,6 +4,12 @@ import shutil

|

|||

import zipfile

|

||||

import uuid as uuid_module

|

||||

|

||||

import json

|

||||

from shlex import quote

|

||||

|

||||

import piexif

|

||||

import re

|

||||

from PIL import Image

|

||||

from django.contrib.gis.gdal import GDALRaster

|

||||

from django.contrib.gis.gdal import OGRGeometry

|

||||

from django.contrib.gis.geos import GEOSGeometry

|

||||

|

|

@@ -22,6 +28,11 @@ from nodeodm.models import ProcessingNode

|

|||

from webodm import settings

|

||||

from .project import Project

|

||||

|

||||

from functools import partial

|

||||

from multiprocessing import cpu_count

|

||||

from concurrent.futures import ThreadPoolExecutor

|

||||

import subprocess

|

||||

|

||||

logger = logging.getLogger('app.logger')

|

||||

|

||||

|

||||

|

|

@@ -57,6 +68,47 @@ def validate_task_options(value):

|

|||

raise ValidationError("Invalid options")

|

||||

|

||||

|

||||

|

||||

def resize_image(image_path, resize_to):

|

||||

try:

|

||||

im = Image.open(image_path)

|

||||

path, ext = os.path.splitext(image_path)

|

||||

resized_image_path = os.path.join(path + '.resized' + ext)

|

||||

|

||||

width, height = im.size

|

||||

max_side = max(width, height)

|

||||

if max_side < resize_to:

|

||||

logger.warning('You asked to make {} bigger ({} --> {}), but we are not going to do that.'.format(image_path, max_side, resize_to))

|

||||

im.close()

|

||||

return {'path': image_path, 'resize_ratio': 1}

|

||||

|

||||

ratio = float(resize_to) / float(max_side)

|

||||

resized_width = int(width * ratio)

|

||||

resized_height = int(height * ratio)

|

||||

|

||||

im.thumbnail((resized_width, resized_height), Image.LANCZOS)

|

||||

|

||||

if 'exif' in im.info:

|

||||

exif_dict = piexif.load(im.info['exif'])

|

||||

exif_dict['Exif'][piexif.ExifIFD.PixelXDimension] = resized_width

|

||||

exif_dict['Exif'][piexif.ExifIFD.PixelYDimension] = resized_height

|

||||

im.save(resized_image_path, "JPEG", exif=piexif.dump(exif_dict), quality=100)

|

||||

else:

|

||||

im.save(resized_image_path, "JPEG", quality=100)

|

||||

|

||||

im.close()

|

||||

|

||||

# Delete original image, rename resized image to original

|

||||

os.remove(image_path)

|

||||

os.rename(resized_image_path, image_path)

|

||||

|

||||

logger.info("Resized {} to {}x{}".format(image_path, resized_width, resized_height))

|

||||

except IOError as e:

|

||||

logger.warning("Cannot resize {}: {}.".format(image_path, str(e)))

|

||||

return None

|

||||

|

||||

return {'path': image_path, 'resize_ratio': ratio}
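The size arithmetic in `resize_image` above can be isolated from the Pillow and EXIF handling. The following is a minimal sketch of just that computation; the helper name `scaled_size` is hypothetical and does not appear in the commit.

```python
def scaled_size(width, height, resize_to):
    """Return (new_width, new_height, ratio) so the longest side becomes resize_to.

    Hypothetical helper mirroring the arithmetic in resize_image: images whose
    longest side is already smaller than resize_to are never enlarged and are
    reported with a ratio of 1.
    """
    max_side = max(width, height)
    if max_side < resize_to:
        return width, height, 1

    ratio = float(resize_to) / float(max_side)
    # int() truncates, as in the original code, so a side may come out
    # one pixel smaller than an exact rounding would give.
    return int(width * ratio), int(height * ratio), ratio


if __name__ == "__main__":
    print(scaled_size(4096, 2048, 1024))
    print(scaled_size(800, 600, 2048))
```

The returned ratio is the value that `resize_image` reports as `resize_ratio`, which `resize_gcp` later uses to rescale GCP pixel coordinates.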

|

||||

|

||||

class Task(models.Model):

|

||||

ASSETS_MAP = {

|

||||

'all.zip': 'all.zip',

|

||||

|

|

@@ -85,6 +137,7 @@ class Task(models.Model):

|

|||

(pending_actions.CANCEL, 'CANCEL'),

|

||||

(pending_actions.REMOVE, 'REMOVE'),

|

||||

(pending_actions.RESTART, 'RESTART'),

|

||||

(pending_actions.RESIZE, 'RESIZE'),

|

||||

)

|

||||

|

||||

id = models.UUIDField(primary_key=True, default=uuid_module.uuid4, unique=True, serialize=False, editable=False)

|

||||

|

|

@@ -109,9 +162,10 @@ class Task(models.Model):

|

|||

|

||||

# mission

|

||||

created_at = models.DateTimeField(default=timezone.now, help_text="Creation date")

|

||||

pending_action = models.IntegerField(choices=PENDING_ACTIONS, db_index=True, null=True, blank=True, help_text="A requested action to be performed on the task. The selected action will be performed by the scheduler at the next iteration.")

|

||||

pending_action = models.IntegerField(choices=PENDING_ACTIONS, db_index=True, null=True, blank=True, help_text="A requested action to be performed on the task. The selected action will be performed by the worker at the next iteration.")

|

||||

|

||||

public = models.BooleanField(default=False, help_text="A flag indicating whether this task is available to the public")

|

||||

resize_to = models.IntegerField(default=-1, help_text="When set to a value different than -1, indicates that the images for this task have been / will be resized to the size specified here before processing.")

|

||||

|

||||

|

||||

def __init__(self, *args, **kwargs):

|

||||

|

|

@@ -172,9 +226,14 @@ class Task(models.Model):

|

|||

"""

|

||||

Get a path relative to the place where assets are stored

|

||||

"""

|

||||

return self.task_path("assets", *args)

|

||||

|

||||

def task_path(self, *args):

|

||||

"""

|

||||

Get path relative to the root task directory

|

||||

"""

|

||||

return os.path.join(settings.MEDIA_ROOT,

|

||||

assets_directory_path(self.id, self.project.id, ""),

|

||||

"assets",

|

||||

*args)

|

||||

|

||||

def is_asset_available_slow(self, asset):

|

||||

|

|

@@ -221,12 +280,18 @@ class Task(models.Model):

|

|||

def process(self):

|

||||

"""

|

||||

This method contains the logic for processing tasks asynchronously

|

||||

from a background thread or from the scheduler. Here tasks that are

|

||||

from a background thread or from a worker. Here tasks that are

|

||||

ready to be processed execute some logic. This could be communication

|

||||

with a processing node or executing a pending action.

|

||||

"""

|

||||

|

||||

try:

|

||||

if self.pending_action == pending_actions.RESIZE:

|

||||

resized_images = self.resize_images()

|

||||

self.resize_gcp(resized_images)

|

||||

self.pending_action = None

|

||||

self.save()

|

||||

|

||||

if self.auto_processing_node and not self.status in [status_codes.FAILED, status_codes.CANCELED]:

|

||||

# No processing node assigned and need to auto assign

|

||||

if self.processing_node is None:

|

||||

|

|

@@ -507,7 +572,6 @@ class Task(models.Model):

|

|||

except FileNotFoundError as e:

|

||||

logger.warning(e)

|

||||

|

||||

|

||||

def set_failure(self, error_message):

|

||||

logger.error("FAILURE FOR {}: {}".format(self, error_message))

|

||||

self.last_error = error_message

|

||||

|

|

@@ -515,8 +579,61 @@ class Task(models.Model):

|

|||

self.pending_action = None

|

||||

self.save()

|

||||

|

||||

def find_all_files_matching(self, regex):

|

||||

directory = full_task_directory_path(self.id, self.project.id)

|

||||

return [os.path.join(directory, f) for f in os.listdir(directory) if

|

||||

re.match(regex, f, re.IGNORECASE)]

|

||||

|

||||

def resize_images(self):

|

||||

"""

|

||||

Destructively resize this task's JPG images while retaining EXIF tags.

|

||||

Resulting images are always converted to JPG.

|

||||

TODO: add support for tiff files

|

||||

:return list containing paths of resized images and resize ratios

|

||||

"""

|

||||

if self.resize_to < 0:

|

||||

logger.warning("We were asked to resize images to {}, this might be an error.".format(self.resize_to))

|

||||

return []

|

||||

|

||||

images_path = self.find_all_files_matching(r'.*\.jpe?g$')

|

||||

|

||||

with ThreadPoolExecutor(max_workers=cpu_count()) as executor:

|

||||

resized_images = list(filter(lambda i: i is not None, executor.map(

|

||||

partial(resize_image, resize_to=self.resize_to),

|

||||

images_path)))

|

||||

|

||||

return resized_images

|

||||

|

||||

def resize_gcp(self, resized_images):

|

||||

"""

|

||||

Destructively change this task's GCP file (if any)

|

||||

by resizing the location of GCP entries.

|

||||

:param resized_images: list of objects having "path" and "resize_ratio" keys

|

||||

for example [{'path': 'path/to/DJI_0018.jpg', 'resize_ratio': 0.25}, ...]

|

||||

:return: path to changed GCP file or None if no GCP file was found/changed

|

||||

"""

|

||||

gcp_path = self.find_all_files_matching(r'.*\.txt$')

|

||||

if len(gcp_path) == 0: return None

|

||||

|

||||

# Assume we only have a single GCP file per task

|

||||

gcp_path = gcp_path[0]

|

||||

resize_script_path = os.path.join(settings.BASE_DIR, 'app', 'scripts', 'resize_gcp.js')

|

||||

|

||||

dict = {}

|

||||

for ri in resized_images:

|

||||

dict[os.path.basename(ri['path'])] = ri['resize_ratio']

|

||||

|

||||

try:

|

||||

new_gcp_content = subprocess.check_output("node {} {} '{}'".format(quote(resize_script_path), quote(gcp_path), json.dumps(dict)), shell=True)

|

||||

with open(gcp_path, 'w') as f:

|

||||

f.write(new_gcp_content.decode('utf-8'))

|

||||

logger.info("Resized GCP file {}".format(gcp_path))

|

||||

return gcp_path

|

||||

except subprocess.CalledProcessError as e:

|

||||

logger.warning("Could not resize GCP file {}: {}".format(gcp_path, str(e)))

|

||||

return None
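`resize_gcp` delegates the rewrite to `app/scripts/resize_gcp.js`, whose contents are not part of this excerpt. To illustrate what "resizing the location of GCP entries" could mean, here is a rough Python sketch. It is not the script's actual logic. It assumes the layout of the GCP fixture in this diff (a projection header, then `x y z pixel_x pixel_y image_name` per line), and the function name and the two-decimal output format are assumptions as well.

```python
def scale_gcp_text(gcp_text, ratios):
    """Scale the pixel columns of a GCP file by per-image resize ratios.

    Hypothetical sketch, not the resize_gcp.js implementation.
    `ratios` maps image file names to resize ratios, e.g. {"DJI_0018.jpg": 0.25}.
    Lines that do not parse, or whose image is not in `ratios`, are kept as-is.
    """
    lines = gcp_text.splitlines()
    out = lines[:1]  # the first line is the projection header
    for line in lines[1:]:
        parts = line.split()
        if len(parts) >= 6 and parts[5] in ratios:
            try:
                px = float(parts[3]) * ratios[parts[5]]
                py = float(parts[4]) * ratios[parts[5]]
            except ValueError:
                out.append(line)  # malformed numbers: leave the line untouched
                continue
            parts[3] = "{:.2f}".format(px)
            parts[4] = "{:.2f}".format(py)
            out.append(" ".join(parts))
        else:
            out.append(line)
    return "\n".join(out)
```

The `ratios` argument has the same shape as the dictionary that `resize_gcp` builds from `resized_images` before calling the Node script.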

|

||||

|

||||

class Meta:

|

||||

permissions = (

|

||||

('view_task', 'Can view task'),

|

||||

)

|

||||

|

||||

|

|

|

|||

|

|

@@ -1,3 +1,4 @@

|

|||

CANCEL = 1

|

||||

REMOVE = 2

|

||||

RESTART = 3

|

||||

RESTART = 3

|

||||

RESIZE = 4

|

||||

|

|

|

|||

|

|

@@ -0,0 +1,4 @@

|

|||

from .plugin_base import PluginBase

|

||||

from .menu import Menu

|

||||

from .mount_point import MountPoint

|

||||

from .functions import *

|

||||

|

|

@@ -0,0 +1,111 @@

|

|||

import os

|

||||

import logging

|

||||

import importlib

|

||||

|

||||

import django

|

||||

import json

|

||||

from django.conf.urls import url

|

||||

from functools import reduce

|

||||

|

||||

from webodm import settings

|

||||

|

||||

logger = logging.getLogger('app.logger')

|

||||

|

||||

def register_plugins():

|

||||

for plugin in get_active_plugins():

|

||||

plugin.register()

|

||||

logger.info("Registered {}".format(plugin))

|

||||

|

||||

|

||||

def get_url_patterns():

|

||||

"""

|

||||

@return the patterns to expose the /public directory of each plugin (if needed)

|

||||

"""

|

||||

url_patterns = []

|

||||

for plugin in get_active_plugins():

|

||||

for mount_point in plugin.mount_points():

|

||||

url_patterns.append(url('^plugins/{}/{}'.format(plugin.get_name(), mount_point.url),

|

||||

mount_point.view,

|

||||

*mount_point.args,

|

||||

**mount_point.kwargs))

|

||||

|

||||

if plugin.has_public_path():

|

||||

url_patterns.append(url('^plugins/{}/(.*)'.format(plugin.get_name()),

|

||||

django.views.static.serve,

|

||||

{'document_root': plugin.get_path("public")}))

|

||||

|

||||

|

||||

return url_patterns

|

||||

|

||||

plugins = None

|

||||

def get_active_plugins():

|

||||

# Cache plugins search

|

||||

global plugins

|

||||

if plugins != None: return plugins

|

||||

|

||||

plugins = []

|

||||

plugins_path = get_plugins_path()

|

||||

|

||||

for dir in [d for d in os.listdir(plugins_path) if os.path.isdir(plugins_path)]:

|

||||

# Each plugin must have a manifest.json and a plugin.py

|

||||

plugin_path = os.path.join(plugins_path, dir)

|

||||

manifest_path = os.path.join(plugin_path, "manifest.json")

|

||||

pluginpy_path = os.path.join(plugin_path, "plugin.py")

|

||||

disabled_path = os.path.join(plugin_path, "disabled")

|

||||

|

||||

# Do not load test plugin unless we're in test mode

|

||||

if os.path.basename(plugin_path) == 'test' and not settings.TESTING:

|

||||

continue

|

||||

|

||||

if not os.path.isfile(manifest_path) or not os.path.isfile(pluginpy_path):

|

||||

logger.warning("Found invalid plugin in {}".format(plugin_path))

|

||||

continue

|

||||

|

||||

# Plugins that have a "disabled" file are disabled

|

||||

if os.path.isfile(disabled_path):

|

||||

continue

|

||||

|

||||

# Read manifest

|

||||

with open(manifest_path) as manifest_file:

|

||||

manifest = json.load(manifest_file)

|

||||

if 'webodmMinVersion' in manifest:

|

||||

min_version = manifest['webodmMinVersion']

|

||||

|

||||

if versionToInt(min_version) > versionToInt(settings.VERSION):

|

||||

logger.warning("In {} webodmMinVersion is set to {} but WebODM version is {}. Plugin will not be loaded. Update WebODM.".format(manifest_path, min_version, settings.VERSION))

|

||||

continue

|

||||

|

||||

# Instantiate the plugin

|

||||

try:

|

||||

module = importlib.import_module("plugins.{}".format(dir))

|

||||

cls = getattr(module, "Plugin")

|

||||

plugins.append(cls())

|

||||

except Exception as e:

|

||||

logger.warning("Failed to instantiate plugin {}: {}".format(dir, e))

|

||||

|

||||

return plugins
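
A note on the discovery loop: a filter that keeps only sub-directories has to test each entry's full path, not the parent directory. The following is a minimal standalone sketch, not WebODM code; `list_subdirs` is a hypothetical helper name, and the directory layout is created in a temporary folder purely for illustration.

```python
import os
import tempfile


def list_subdirs(parent):
    """Return the names of the entries in `parent` that are directories."""
    return sorted(
        d for d in os.listdir(parent)
        if os.path.isdir(os.path.join(parent, d))  # test the entry itself
    )


with tempfile.TemporaryDirectory() as root:
    os.mkdir(os.path.join(root, "measure"))             # looks like a plugin folder
    open(os.path.join(root, "README.txt"), "w").close()  # stray file, must be skipped
    print(list_subdirs(root))  # ['measure']
```

With this shape, stray files in the plugins folder are skipped before the manifest check rather than being reported as invalid plugins.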

|

||||

|

||||

|

||||

def get_plugins_path():

|

||||

current_path = os.path.dirname(os.path.realpath(__file__))

|

||||

return os.path.abspath(os.path.join(current_path, "..", "..", "plugins"))

|

||||

|

||||

|

||||

def versionToInt(version):

|

||||

"""

|

||||

Converts a WebODM version string (major.minor.build) to an integer value

|

||||

for comparison

|

||||

>>> versionToInt("1.2.3")

|

||||

100203

|

||||

>>> versionToInt("1")

|

||||

100000

|

||||

>>> versionToInt("1.2.3.4")

|

||||

100203

|

||||

>>> versionToInt("wrong")

|

||||

-1

|

||||

"""

|

||||

|

||||

try:

|

||||

return sum([reduce(lambda mult, ver: mult * ver, i) for i in zip([100000, 100, 1], map(int, version.split(".")))])

|

||||

except Exception:

|

||||

return -1
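
The version arithmetic can be checked in isolation. The sketch below restates the same computation under the assumption that the code runs on Python 3, where `reduce` has to be imported from `functools` (the import block of this file is not visible in this part of the diff). `version_to_int` is a hypothetical name used only here.

```python
from functools import reduce


def version_to_int(version):
    """Map "major.minor.build" to major*100000 + minor*100 + build, or -1 on bad input."""
    try:
        # zip() pairs each weight with one version component and stops after
        # three pairs, so a fourth component such as "1.2.3.4" is ignored.
        return sum(
            reduce(lambda mult, ver: mult * ver, pair)
            for pair in zip([100000, 100, 1], map(int, version.split(".")))
        )
    except Exception:
        return -1


print(version_to_int("1.2.3"))   # 100203
print(version_to_int("0.10.0") > version_to_int("0.9.10"))  # True
print(version_to_int("wrong"))   # -1
```

Because each component is weighted rather than compared as text, "0.10.0" sorts after "0.9.10", which a plain string comparison would get wrong.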

|

||||

|

|

@ -0,0 +1,22 @@

|

|||

class Menu:

|

||||

def __init__(self, label, link = "javascript:void(0)", css_icon = 'fa fa-caret-right fa-fw', submenu = []):

|

||||

"""

|

||||

Create a menu

|

||||

:param label: text shown in entry

|

||||

:param css_icon: class used for showing an icon (for example, "fa fa-wrench")

|

||||

:param link: link of entry (use "#" or "javascript:void(0);" for no action)

|

||||

:param submenu: list of Menu items

|

||||

"""

|

||||

super().__init__()

|

||||

|

||||

self.label = label

|

||||

self.css_icon = css_icon

|

||||

self.link = link

|

||||

self.submenu = submenu

|

||||

|

||||

if self.has_submenu():

|

||||

self.link = "#"

|

||||

|

||||

|

||||

def has_submenu(self):

|

||||

return len(self.submenu) > 0
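
One thing to keep in mind when using this class: a list literal as a default argument is created once and shared by every call that relies on the default. The sketch below is a variant, not the committed code, that uses a `None` sentinel and copies the list so that each menu owns its own `submenu`. The labels and links are made up for illustration.

```python
class Menu:
    def __init__(self, label, link="javascript:void(0)",
                 css_icon="fa fa-caret-right fa-fw", submenu=None):
        self.label = label
        self.css_icon = css_icon
        self.link = link
        # Copy into a fresh list so instances never share state.
        self.submenu = [] if submenu is None else list(submenu)

        if self.has_submenu():
            self.link = "#"

    def has_submenu(self):
        return len(self.submenu) > 0


a = Menu("A")
b = Menu("B")
a.submenu.append(Menu("child"))
print(len(b.submenu))  # 0

tools = Menu("Tools", link="/tools", submenu=[Menu("Measure", link="/measure")])
print(tools.link)  # #
```

As in the original, a menu that has children gets its link replaced by "#", so only leaf entries navigate.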

|

||||

|

|

@ -0,0 +1,17 @@

|

|||

import re

|

||||

|

||||

class MountPoint:

|

||||

def __init__(self, url, view, *args, **kwargs):

|

||||

"""

|

||||

|

||||

:param url: path to mount this view to, relative to plugins directory

|

||||

:param view: Django view

|

||||

:param args: extra args to pass to url() call

|

||||

:param kwargs: extra kwargs to pass to url() call

|

||||

"""

|

||||

super().__init__()

|

||||

|

||||

self.url = re.sub(r'^/+', '', url) # remove leading slashes

|

||||

self.view = view

|

||||

self.args = args

|

||||

self.kwargs = kwargs
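
To see how a mount point ends up in a URL pattern, the sketch below combines the class with the format string used in `get_url_patterns()`. It runs without Django: `hello_view` and the plugin name "test" are placeholders, and plain `re.match` stands in for Django's resolver, which is an assumption about how the pattern is later evaluated.

```python
import re


class MountPoint:
    def __init__(self, url, view, *args, **kwargs):
        self.url = re.sub(r'^/+', '', url)  # remove leading slashes
        self.view = view
        self.args = args
        self.kwargs = kwargs


def hello_view(request):  # placeholder for a Django view
    return "hello"


mp = MountPoint("//hello/$", hello_view)
pattern = '^plugins/{}/{}'.format("test", mp.url)

print(pattern)                                         # ^plugins/test/hello/$
print(bool(re.match(pattern, "plugins/test/hello/")))  # True
print(bool(re.match(pattern, "plugins/other/hello/"))) # False
```

Stripping the leading slashes keeps the joined pattern from containing a double slash after the plugin name.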

|

||||

|

|

@ -0,0 +1,85 @@

|

|||

import logging, os, sys

|

||||

from abc import ABC

|

||||

|

||||

logger = logging.getLogger('app.logger')

|

||||

|

||||

class PluginBase(ABC):

|

||||

def __init__(self):

|

||||

self.name = self.get_module_name().split(".")[-2]

|

||||

|

||||

def register(self):

|

||||

pass

|

||||

|

||||

def get_path(self, *paths):

|

||||

"""

|

||||

Gets the path of the directory of the plugin, optionally chained with paths

|

||||

:return: path

|

||||

"""

|

||||

return os.path.join(os.path.dirname(sys.modules[self.get_module_name()].__file__), *paths)

|

||||

|

||||

def get_name(self):

|

||||

"""

|

||||

:return: Name of current module (reflects the directory in which this plugin is stored)

|

||||

"""

|

||||

return self.name

|

||||

|

||||

def get_module_name(self):

|

||||

return self.__class__.__module__

|

||||

|

||||

def get_include_js_urls(self):

|

||||

return [self.public_url(js_file) for js_file in self.include_js_files()]

|

||||

|

||||

def get_include_css_urls(self):

|

||||

return [self.public_url(css_file) for css_file in self.include_css_files()]

|

||||

|

||||

def public_url(self, path):

|

||||

"""

|

||||

:param path: unix-style path

|

||||

:return: Path that can be accessed via a URL (from the browser), relative to plugins/<yourplugin>/public

|

||||

"""

|

||||

return "/plugins/{}/{}".format(self.get_name(), path)

|

||||

|

||||

def template_path(self, path):

|

||||

"""

|

||||

:param path: unix-style path

|

||||

:return: path used to reference Django templates for a plugin

|

||||

"""

|

||||

return "plugins/{}/templates/{}".format(self.get_name(), path)

|

||||

|

||||

def has_public_path(self):

|

||||

return os.path.isdir(self.get_path("public"))

|

||||

|

||||

def include_js_files(self):

|

||||

"""

|

||||

Should be overridden by plugins to communicate

|

||||

which JS files should be included in the WebODM interface

|

||||

All paths are relative to a plugin's /public folder.

|

||||

"""

|

||||

return []

|

||||

|

||||

def include_css_files(self):

|

||||

"""

|

||||

Should be overridden by plugins to communicate

|

||||

which CSS files should be included in the WebODM interface

|

||||

All paths are relative to a plugin's /public folder.

|

||||

"""

|

||||

return []

|

||||

|

||||

def main_menu(self):

|

||||

"""

|

||||

Should be overridden by plugins that want to add

|

||||

items to the side menu.

|

||||

:return: [] of Menu objects

|

||||

"""

|

||||

return []

|

||||

|

||||

def mount_points(self):

|

||||

"""

|

||||

Should be overridden by plugins that want to connect

|

||||

custom Django views

|

||||

:return: [] of MountPoint objects

|

||||

"""

|

||||

return []

|

||||

|

||||

def __str__(self):

|

||||

return "[{}]".format(self.get_module_name())
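
A short sketch of how a plugin might build on this base class. It assumes the `Plugin` class lives in `plugins/<name>/plugin.py`, so that its module name is `plugins.<name>.plugin` and the second-to-last component is the directory name. The base class is trimmed to the methods used here, and the `__module__` assignment only simulates that layout so the example runs as a single file; "measure" and "main.js" are made-up names.

```python
class PluginBase:
    """Trimmed copy of the base class, limited to what this example needs."""

    def __init__(self):
        self.name = self.get_module_name().split(".")[-2]

    def get_name(self):
        return self.name

    def get_module_name(self):
        return self.__class__.__module__

    def public_url(self, path):
        return "/plugins/{}/{}".format(self.get_name(), path)

    def include_js_files(self):
        return []

    def get_include_js_urls(self):
        return [self.public_url(js_file) for js_file in self.include_js_files()]


class Plugin(PluginBase):
    __module__ = "plugins.measure.plugin"  # simulates plugins/measure/plugin.py

    def include_js_files(self):
        return ["main.js"]  # relative to the plugin's /public folder


p = Plugin()
print(p.get_name())             # measure
print(p.get_include_js_urls())  # ['/plugins/measure/main.js']
```

The plugin only names its files; the base class turns them into browser-accessible URLs under the plugin's own prefix.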

|

||||

|

|

@ -0,0 +1,5 @@

|

|||

{% extends "app/logged_in_base.html" %}

|

||||

|

||||

{% block content %}

|

||||

Hello World! Override me.

|

||||

{% endblock %}

|

||||

|

|

@ -1,96 +0,0 @@

|

|||

import logging

|

||||

import traceback

|

||||

from multiprocessing.dummy import Pool as ThreadPool

|

||||

from threading import Lock

|

||||

|

||||

from apscheduler.schedulers import SchedulerAlreadyRunningError, SchedulerNotRunningError

|

||||

from apscheduler.schedulers.background import BackgroundScheduler

|

||||

from django import db

|

||||

from django.db.models import Q, Count

|

||||

from webodm import settings

|

||||

|

||||

from app.models import Task, Project

|

||||

from nodeodm import status_codes

|

||||

from nodeodm.models import ProcessingNode

|

||||

from app.background import background

|

||||

|

||||

logger = logging.getLogger('app.logger')

|

||||

scheduler = BackgroundScheduler({

|

||||

'apscheduler.job_defaults.coalesce': 'true',

|

||||

'apscheduler.job_defaults.max_instances': '3',

|

||||

})

|

||||

|

||||

@background

|

||||

def update_nodes_info():

|

||||

processing_nodes = ProcessingNode.objects.all()

|

||||

for processing_node in processing_nodes:

|

||||

processing_node.update_node_info()

|

||||

|

||||

tasks_mutex = Lock()

|

||||

|

||||

@background

|

||||

def process_pending_tasks():

|

||||

tasks = []

|

||||

try:

|

||||

tasks_mutex.acquire()

|

||||

|

||||

# All tasks that have a processing node assigned

|

||||

# Or that need one assigned (via auto)

|

||||

# or tasks that need a status update

|

||||

# or tasks that have a pending action

|

||||

# and that are not locked (being processed by another thread)

|

||||

tasks = Task.objects.filter(Q(processing_node__isnull=True, auto_processing_node=True) |

|

||||

Q(Q(status=None) | Q(status__in=[status_codes.QUEUED, status_codes.RUNNING]), processing_node__isnull=False) |

|

||||

Q(pending_action__isnull=False)).exclude(Q(processing_lock=True))

|

||||

for task in tasks:

|

||||

task.processing_lock = True

|

||||

task.save()

|

||||

finally:

|

||||

tasks_mutex.release()

|

||||

|

||||

def process(task):

|

||||

try:

|

||||

task.process()

|

||||

except Exception as e:

|

||||

logger.error("Uncaught error! This is potentially bad. Please report it to http://github.com/OpenDroneMap/WebODM/issues: {} {}".format(e, traceback.format_exc()))

|

||||

if settings.TESTING: raise e

|

||||

finally:

|

||||

# Might have been deleted

|

||||

if task.pk is not None:

|

||||

task.processing_lock = False

|

||||

task.save()

|

||||

|

||||

db.connections.close_all()

|

||||

|

||||

if tasks.count() > 0:

|

||||

pool = ThreadPool(tasks.count())

|

||||

pool.map(process, tasks, chunksize=1)

|

||||

pool.close()

|

||||

pool.join()

|

||||

|

||||

|

||||

def cleanup_projects():

|

||||

# Delete all projects that are marked for deletion

|

||||

# and that have no tasks left

|

||||

total, count_dict = Project.objects.filter(deleting=True).annotate(

|

||||

tasks_count=Count('task')

|

||||

).filter(tasks_count=0).delete()

|

||||

if total > 0 and 'app.Project' in count_dict:

|

||||

logger.info("Deleted {} projects".format(count_dict['app.Project']))

|

||||

|

||||

def setup():

|

||||

try:

|

||||

scheduler.start()

|

||||

scheduler.add_job(update_nodes_info, 'interval', seconds=30)

|

||||

scheduler.add_job(process_pending_tasks, 'interval', seconds=5)

|

||||

scheduler.add_job(cleanup_projects, 'interval', seconds=60)

|

||||

except SchedulerAlreadyRunningError:

|

||||

logger.warning("Scheduler already running (this is OK while testing)")

|

||||

|

||||

def teardown():

|

||||

logger.info("Stopping scheduler...")

|

||||

try:

|

||||

scheduler.shutdown()

|

||||

logger.info("Scheduler stopped")

|

||||

except SchedulerNotRunningError:

|

||||

logger.warning("Scheduler not running")

|

||||

|

|

@ -0,0 +1,25 @@

|

|||

#!/usr/bin/env node

|

||||

|

||||

const fs = require('fs');

|

||||

const Gcp = require('../static/app/js/classes/Gcp');

|

||||

const argv = process.argv.slice(2);

|

||||

function die(s){

|

||||

console.log(s);

|

||||

process.exit(1);

|

||||

}

|

||||

if (argv.length != 2){

|

||||

die(`Usage: ./resize_gcp.js <path/to/gcp_file.txt> <JSON encoded image-->ratio map>`);

|

||||

}

|

||||

|

||||

const [inputFile, jsonMap] = argv;

|

||||

if (!fs.existsSync(inputFile)){

|

||||

die('File does not exist: ' + inputFile);

|

||||

}

|

||||

const originalGcp = new Gcp(fs.readFileSync(inputFile, 'utf8'));

|

||||

try{

|

||||

const map = JSON.parse(jsonMap);

|

||||

const newGcp = originalGcp.resize(map, true);

|

||||

console.log(newGcp.toString());

|

||||

}catch(e){

|

||||

die("Not a valid JSON string: " + jsonMap);

|

||||

}

|

||||

|

|

@ -259,4 +259,9 @@ footer{

|

|||

&:first-child{

|

||||

border-top-width: 1px;

|

||||

}

|

||||

}

|

||||

|

||||

.full-height{

|

||||

height: calc(100vh - 110px);

|

||||

padding-bottom: 12px;

|

||||

}

|

||||

|

|

@ -9,6 +9,9 @@ ul#side-menu.nav a,

|

|||

{

|

||||

color: theme("primary");

|

||||

}

|

||||

.theme-border-primary{

|

||||

border-color: theme("primary");

|

||||

}

|

||||

.tooltip{

|

||||

.tooltip-inner{

|

||||

background-color: theme("primary");

|

||||

|

|

@ -162,6 +165,9 @@ footer,

|

|||

.popover-title{

|

||||

border-bottom-color: theme("border");

|

||||

}

|

||||

.theme-border{

|

||||

border-color: theme("border");

|

||||

}

|

||||

|

||||

/* Highlight */

|

||||

.task-list-item:nth-child(odd),

|

||||

|

|

|

|||

|

After Width: | Height: | Size: 3.5 KiB |

|

After Width: | Height: | Size: 1.4 KiB |

|

|

@ -0,0 +1,58 @@

|

|||

class Gcp{

|

||||

constructor(text){

|

||||

this.text = text;

|

||||

}

|

||||

|

||||

// Scale the image location of GCPs

|

||||

// according to the values specified in the map

|

||||

// @param imagesRatioMap {Object} object in which keys are image names and values are scaling ratios

|

||||

// example: {'DJI_0018.jpg': 0.5, 'DJI_0019.JPG': 0.25}

|

||||

// @return {Gcp} a new GCP object

|

||||

resize(imagesRatioMap, muteWarnings = false){

|

||||

// Make sure dict is all lower case and values are floats

|

||||

let ratioMap = {};

|

||||

for (let k in imagesRatioMap) ratioMap[k.toLowerCase()] = parseFloat(imagesRatioMap[k]);

|

||||

|

||||

const lines = this.text.split(/\r?\n/);

|

||||

let output = "";

|

||||

|

||||

if (lines.length > 0){

|

||||

output += lines[0] + '\n'; // coordinate system description

|

||||

|

||||

for (let i = 1; i < lines.length; i++){

|

||||

let line = lines[i].trim();

|

||||

if (line !== ""){

|

||||

let parts = line.split(/\s+/);

|

||||

if (parts.length >= 6){

|

||||

let [x, y, z, px, py, imagename, ...extracols] = parts;

|

||||

let ratio = ratioMap[imagename.toLowerCase()];

|

||||

|

||||

px = parseFloat(px);

|

||||

py = parseFloat(py);

|

||||

|

||||

if (ratio !== undefined){

|

||||

px *= ratio;

|

||||

py *= ratio;

|

||||

}else{

|

||||

if (!muteWarnings) console.warn(`${imagename} not found in ratio map. Are you missing some images?`);

|

||||

}

|

||||

|

||||

let extra = extracols.length > 0 ? ' ' + extracols.join(' ') : '';

|

||||

output += `${x} ${y} ${z} ${px.toFixed(2)} ${py.toFixed(2)} ${imagename}${extra}\n`;

|

||||

}else{

|

||||

if (!muteWarnings) console.warn(`Invalid GCP format at line ${i}: ${line}`);

|

||||

output += line + '\n';

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

return new Gcp(output);

|

||||

}

|

||||

|

||||

toString(){

|

||||

return this.text;

|

||||

}

|

||||

}

|

||||

|

||||

module.exports = Gcp;

|

||||

|

|

@ -1,6 +1,7 @@

|

|||

const CANCEL = 1,

|

||||

REMOVE = 2,

|

||||

RESTART = 3;

|

||||

RESTART = 3,

|

||||

RESIZE = 4;

|

||||

|

||||

let pendingActions = {

|

||||

[CANCEL]: {

|

||||

|

|

@ -11,6 +12,9 @@ let pendingActions = {

|

|||

},

|

||||

[RESTART]: {

|

||||

descr: "Restarting..."

|

||||

},

|

||||

[RESIZE]: {

|

||||

descr: "Resizing images..."

|

||||

}

|

||||

};

|

||||

|

||||

|

|

@ -18,6 +22,7 @@ export default {

|

|||

CANCEL: CANCEL,

|

||||

REMOVE: REMOVE,

|

||||

RESTART: RESTART,

|

||||

RESIZE: RESIZE,

|

||||

|

||||

description: function(pendingAction) {

|

||||

if (pendingActions[pendingAction]) return pendingActions[pendingAction].descr;

|

||||

|

|

|

|||

|

|

@ -0,0 +1,26 @@

|

|||

const dict = [

|

||||

{k: 'NO', v: 0, human: "No"}, // Don't resize

|

||||

{k: 'YES', v: 1, human: "Yes"}, // Resize on server

|

||||

{k: 'YESINBROWSER', v: 2, human: "Yes (In browser)"} // Resize on browser

|

||||

];

|

||||

|

||||

const exp = {

|

||||

all: () => dict.map(d => d.v),

|

||||

fromString: (s) => {

|

||||

let v = parseInt(s, 10);

|

||||

if (!isNaN(v) && v >= 0 && v <= 2) return v;

|

||||

else return 0;

|

||||

},

|

||||

toHuman: (v) => {

|

||||

for (let i in dict){

|

||||

if (dict[i].v === v) return dict[i].human;

|

||||

}

|

||||

throw new Error("Invalid value: " + v);

|

||||

}

|

||||

};

|

||||

dict.forEach(en => {

|

||||

exp[en.k] = en.v;

|

||||

});

|

||||

|

||||

export default exp;

|

||||

|

||||

|

|

@ -63,6 +63,23 @@ export default {

|

|||

parser.href = href;

|

||||

|

||||

return `${parser.protocol}//${parser.host}/${path}`;

|

||||

},

|

||||

|

||||

assert: function(condition, message) {

|

||||

if (!condition) {

|

||||

message = message || "Assertion failed";

|

||||

if (typeof Error !== "undefined") {

|

||||

throw new Error(message);

|

||||

}

|

||||

throw message; // Fallback

|

||||

}

|

||||

},

|

||||

|

||||

getCurrentScriptDir: function(){

|

||||

let scripts= document.getElementsByTagName('script');

|

||||

let path= scripts[scripts.length-1].src.split('?')[0]; // remove any ?query

|

||||

let mydir= path.split('/').slice(0, -1).join('/')+'/'; // remove last filename part of path

|

||||

return mydir;

|

||||

}

|

||||

};

|

||||

|

||||

|

|

|

|||

|

|

@ -0,0 +1,30 @@

|

|||

import { EventEmitter } from 'fbemitter';

|

||||

import ApiFactory from './ApiFactory';

|

||||

import Map from './Map';

|

||||

import $ from 'jquery';

|

||||

import SystemJS from 'SystemJS';

|

||||

|

||||

if (!window.PluginsAPI){

|

||||

const events = new EventEmitter();

|

||||

const factory = new ApiFactory(events);

|

||||

|

||||

SystemJS.config({

|

||||

baseURL: '/plugins',

|

||||

map: {

|

||||

css: '/static/app/js/vendor/css.js'

|

||||

},

|

||||

meta: {

|

||||

'*.css': { loader: 'css' }

|

||||

}

|

||||

});

|

||||

|

||||

window.PluginsAPI = {

|

||||

Map: factory.create(Map),

|

||||

|

||||

SystemJS,

|

||||

events

|

||||

};

|

||||

}

|

||||

|

||||

export default window.PluginsAPI;

|

||||

|

||||

|

|

@ -0,0 +1,53 @@

|

|||

import SystemJS from 'SystemJS';

|

||||

|

||||

export default class ApiFactory{

|

||||

// @param events {EventEmitter}

|

||||

constructor(events){

|

||||

this.events = events;

|

||||

}

|

||||

|

||||

// @param api {Object}

|

||||

create(api){

|

||||

|

||||

// Adds two functions to obj

|

||||

// - eventName

|

||||

// - triggerEventName

|

||||

// We could just use events, but methods

|

||||

// are more robust as we can detect more easily if

|

||||

// things break

|

||||

const addEndpoint = (obj, eventName, preTrigger = () => {}) => {

|

||||

obj[eventName] = (callbackOrDeps, callbackOrUndef) => {

|

||||

if (Array.isArray(callbackOrDeps)){

|

||||

// Deps

|

||||

// Load dependencies, then raise event as usual

|

||||

// by appending the dependencies to the argument list

|

||||

this.events.addListener(`${api.namespace}::${eventName}`, (...args) => {

|

||||

Promise.all(callbackOrDeps.map(dep => SystemJS.import(dep)))

|

||||

.then((...deps) => {

|

||||

callbackOrUndef(...(Array.from(args).concat(...deps)));

|

||||

});

|

||||

});

|

||||

}else{

|

||||

// Callback

|

||||

this.events.addListener(`${api.namespace}::${eventName}`, callbackOrDeps);

|

||||

}

|

||||

}

|

||||

|

||||

const triggerEventName = "trigger" + eventName[0].toUpperCase() + eventName.slice(1);

|

||||

|

||||

obj[triggerEventName] = (...args) => {

|

||||

preTrigger(...args);

|

||||

this.events.emit(`${api.namespace}::${eventName}`, ...args);

|

||||

};

|

||||

}

|

||||

|

||||

const obj = {};

|

||||

api.endpoints.forEach(endpoint => {

|

||||

if (!Array.isArray(endpoint)) endpoint = [endpoint];

|

||||

addEndpoint(obj, ...endpoint);

|

||||

});

|

||||

return obj;

|

||||

}

|

||||

|

||||

}

|

||||

|

||||

|

|

@ -0,0 +1,17 @@

|

|||

import Utils from '../Utils';

|

||||

|

||||

const { assert } = Utils;

|

||||

|

||||

const leafletPreCheck = (options) => {

|

||||

assert(options.map !== undefined);

|

||||

};

|

||||

|

||||

export default {

|

||||

namespace: "Map",

|

||||

|

||||

endpoints: [

|

||||

["willAddControls", leafletPreCheck],

|

||||

["didAddControls", leafletPreCheck]

|

||||

]

|

||||

};

|

||||

|

||||

|

|

@ -1,12 +1,8 @@

|

|||

import React from 'react';

|

||||

import ReactDOMServer from 'react-dom/server';

|

||||

import ReactDOM from 'react-dom';

|

||||

import '../css/Map.scss';

|

||||

import 'leaflet/dist/leaflet.css';

|

||||

import Leaflet from 'leaflet';

|

||||

import async from 'async';

|

||||

import 'leaflet-measure/dist/leaflet-measure.css';

|

||||

import 'leaflet-measure/dist/leaflet-measure';

|

||||

import '../vendor/leaflet/L.Control.MousePosition.css';

|

||||

import '../vendor/leaflet/L.Control.MousePosition';

|

||||

import '../vendor/leaflet/Leaflet.Autolayers/css/leaflet.auto-layers.css';

|

||||

|

|

@ -17,6 +13,7 @@ import SwitchModeButton from './SwitchModeButton';

|

|||

import ShareButton from './ShareButton';

|

||||

import AssetDownloads from '../classes/AssetDownloads';

|

||||

import PropTypes from 'prop-types';

|

||||

import PluginsAPI from '../classes/plugins/API';

|

||||

|

||||

class Map extends React.Component {

|

||||

static defaultProps = {

|

||||

|

|

@ -174,16 +171,22 @@ class Map extends React.Component {

|

|||

|

||||

this.map = Leaflet.map(this.container, {

|

||||

scrollWheelZoom: true,

|

||||

positionControl: true

|

||||

positionControl: true,

|

||||

zoomControl: false

|

||||

});

|

||||

|

||||

const measureControl = Leaflet.control.measure({

|

||||

primaryLengthUnit: 'meters',

|

||||

secondaryLengthUnit: 'feet',

|

||||

primaryAreaUnit: 'sqmeters',

|

||||

secondaryAreaUnit: 'acres'

|

||||

PluginsAPI.Map.triggerWillAddControls({

|

||||

map: this.map

|

||||

});

|

||||

measureControl.addTo(this.map);

|

||||

|

||||

Leaflet.control.scale({

|

||||

maxWidth: 250,

|

||||

}).addTo(this.map);

|

||||

|

||||

// Add zoom control with custom options

|

||||

Leaflet.control.zoom({

|

||||

position:'bottomleft'

|

||||

}).addTo(this.map);

|

||||

|

||||

if (showBackground) {

|

||||

this.basemaps = {

|

||||

|

|

@ -216,10 +219,6 @@ class Map extends React.Component {

|

|||

}).addTo(this.map);

|

||||

|

||||

this.map.fitWorld();

|

||||

|

||||

Leaflet.control.scale({

|

||||

maxWidth: 250,

|

||||

}).addTo(this.map);

|

||||

this.map.attributionControl.setPrefix("");

|

||||

|

||||

this.loadImageryLayers(true).then(() => {

|

||||

|

|

@ -236,6 +235,13 @@ class Map extends React.Component {

|

|||

}

|

||||

});

|

||||

});

|

||||

|

||||

// PluginsAPI.events.addListener('Map::AddPanel', (e) => {

|

||||

// console.log("Received response: " + e);

|

||||

// });

|

||||

PluginsAPI.Map.triggerDidAddControls({

|

||||

map: this.map

|

||||

});

|

||||

}

|

||||

|

||||

componentDidUpdate(prevProps) {

|

||||

|

|

@ -269,6 +275,7 @@ class Map extends React.Component {

|

|||

return (

|

||||

<div style={{height: "100%"}} className="map">

|

||||

<ErrorMessage bind={[this, 'error']} />

|

||||

|

||||

<div

|

||||

style={{height: "100%"}}

|

||||

ref={(domNode) => (this.container = domNode)}

|

||||

|

|

|

|||

|

|

@ -3,6 +3,7 @@ import React from 'react';

|

|||

import EditTaskForm from './EditTaskForm';

|

||||

import PropTypes from 'prop-types';

|

||||

import Storage from '../classes/Storage';

|

||||

import ResizeModes from '../classes/ResizeModes';

|

||||

|

||||

class NewTaskPanel extends React.Component {

|

||||

static defaultProps = {

|

||||

|

|

@ -25,14 +26,14 @@ class NewTaskPanel extends React.Component {

|

|||

this.state = {

|

||||

name: props.name,

|

||||

editTaskFormLoaded: false,

|

||||

resize: Storage.getItem('do_resize') !== null ? Storage.getItem('do_resize') == "1" : true,

|

||||

resizeMode: Storage.getItem('resize_mode') === null ? ResizeModes.YES : ResizeModes.fromString(Storage.getItem('resize_mode')),

|

||||

resizeSize: parseInt(Storage.getItem('resize_size')) || 2048

|

||||

};

|

||||

|

||||

this.save = this.save.bind(this);

|

||||

this.handleFormTaskLoaded = this.handleFormTaskLoaded.bind(this);

|

||||

this.getTaskInfo = this.getTaskInfo.bind(this);

|

||||

this.setResize = this.setResize.bind(this);

|

||||

this.setResizeMode = this.setResizeMode.bind(this);

|

||||

this.handleResizeSizeChange = this.handleResizeSizeChange.bind(this);

|

||||

}

|

||||

|

||||

|

|

@ -40,7 +41,7 @@ class NewTaskPanel extends React.Component {

|

|||

e.preventDefault();

|

||||

this.taskForm.saveLastPresetToStorage();

|

||||

Storage.setItem('resize_size', this.state.resizeSize);

|

||||

Storage.setItem('do_resize', this.state.resize ? "1" : "0");

|

||||

Storage.setItem('resize_mode', this.state.resizeMode);

|

||||

if (this.props.onSave) this.props.onSave(this.getTaskInfo());

|

||||

}

|

||||

|

||||

|

|

@ -54,13 +55,14 @@ class NewTaskPanel extends React.Component {

|

|||

|

||||

getTaskInfo(){

|

||||

return Object.assign(this.taskForm.getTaskInfo(), {

|

||||

resizeTo: (this.state.resize && this.state.resizeSize > 0) ? this.state.resizeSize : null

|

||||

resizeSize: this.state.resizeSize,

|

||||

resizeMode: this.state.resizeMode